Theory-based Causal Transfer: Integrating Instance-level Induction and Abstract-level Structure Learning

Mark Edmonds1,4, Xiaojian Ma1, Siyuan Qi1,4, Yixin Zhu2,4,Hongjing Lu2,3, Song-Chun Zhu1,2,4

1 Department of Computer Science, UCLA | 2 Department of Statistics, UCLA | 3 Department of Pyschology, UCLA

4 International Center for AI and Robot Autonomy (CARA)

Abstract

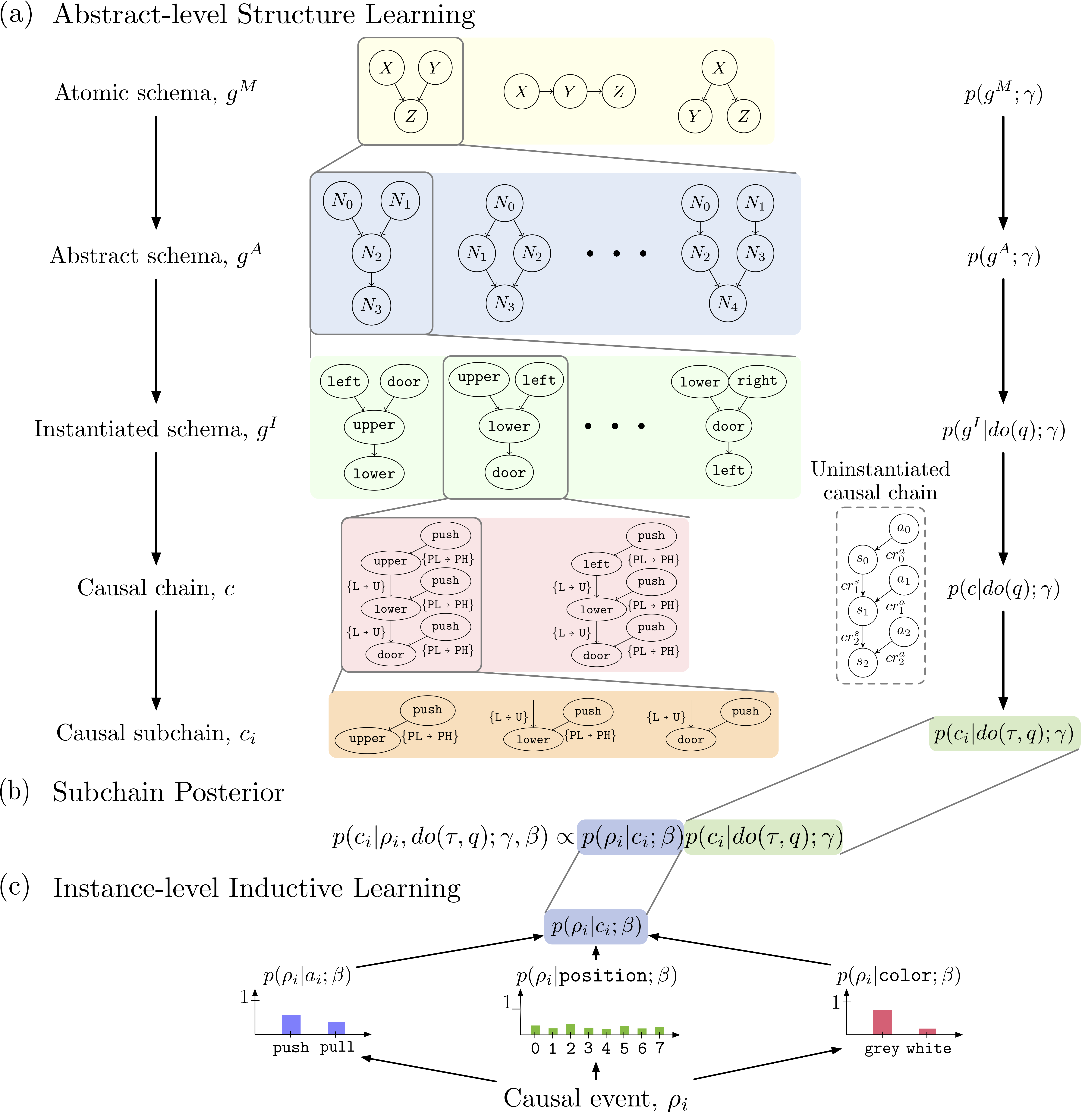

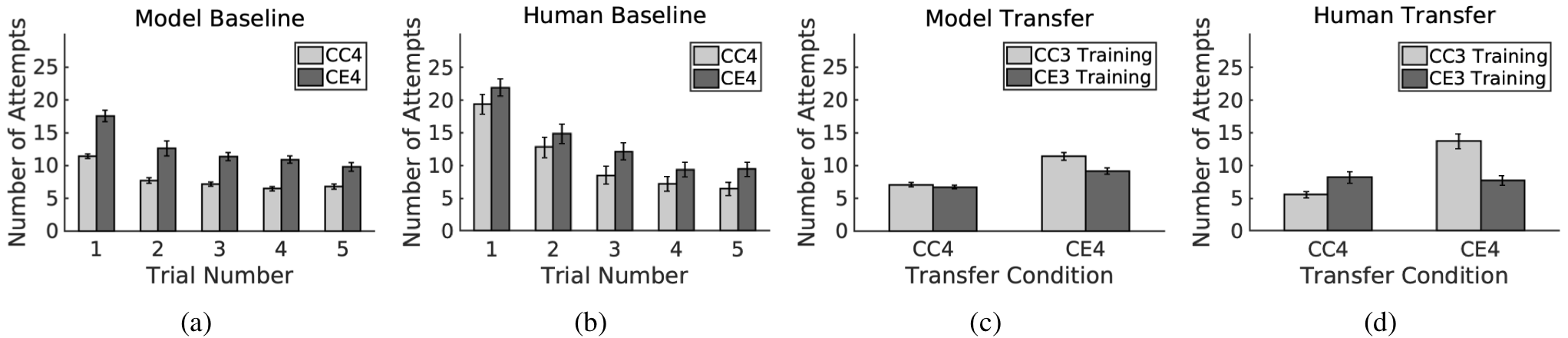

Learning transferable knowledge across similar but different settings is a fundamental component of generalized intelligence. In this paper, we approach the transfer learning challenge from a causal theory perspective. Our agent is endowed with two basic yet general theories for transfer learning: (i) a task shares a common abstract structure that is invariant across domains, and (ii) the behavior of specific features of the environment remain constant across domains. We adopt a Bayesian perspective of causal theory induction and use these theories to transfer knowledge between environments. Given these general theories, the goal is to train an agent by interactively exploring the problem space to (i) discover, form, and transfer useful abstract and structural knowledge, and (ii) induce useful knowledge from the instance-level attributes observed in the environment. A hierarchy of Bayesian structures is used to model abstract-level structural causal knowledge, and an instance-level associative learning scheme learns which specific objects can be used to induce state changes through interaction. This model-learning scheme is then integrated with a model-based planner to achieve a task in the OpenLock environment, a virtual "escape room" with a complex hierarchy that requires agents to reason about an abstract, generalized causal structure. We compare performances against a set of predominate model-free RL algorithms. RL agents showed poor ability transferring learned knowledge across different trials. Whereas the proposed model revealed similar performance trends as human learners, and more importantly, demonstrated transfer behavior across trials and learning situations.