Selected Figures

Mark Edmonds1, Feng Gao2, Hangxin Liu1, Xu Xie2, Siyuan Qi1, Brandon Rothrock3, Yixin Zhu2, Ying Nian Wu2, Hongjing Lu2,4, Song-Chun Zhu1,2

1 Department of Computer Science, UCLA | 2 Department of Statistics, UCLA | 3 Jet Propulsion Lab, Caltech | 4 Department of Psychology, UCLA

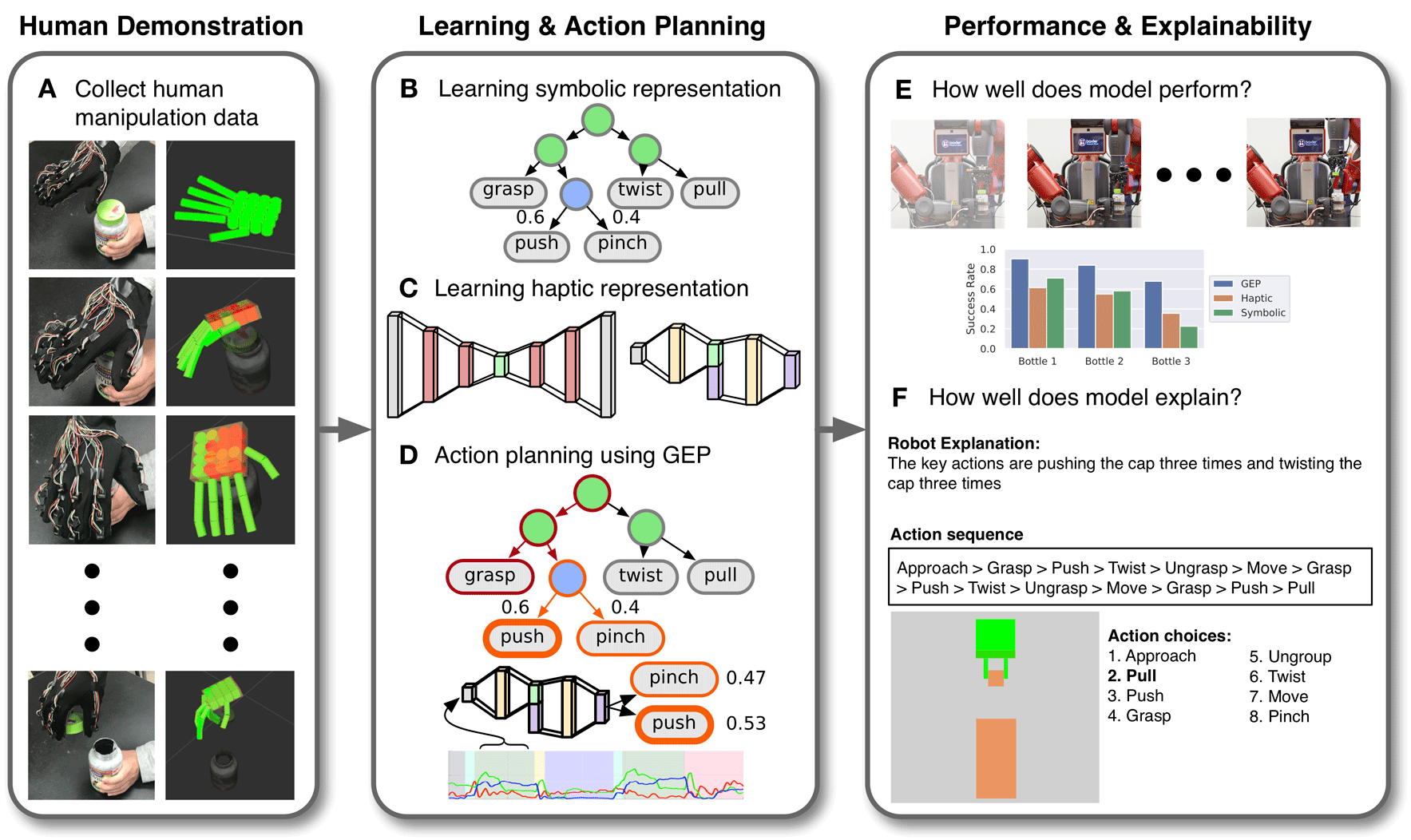

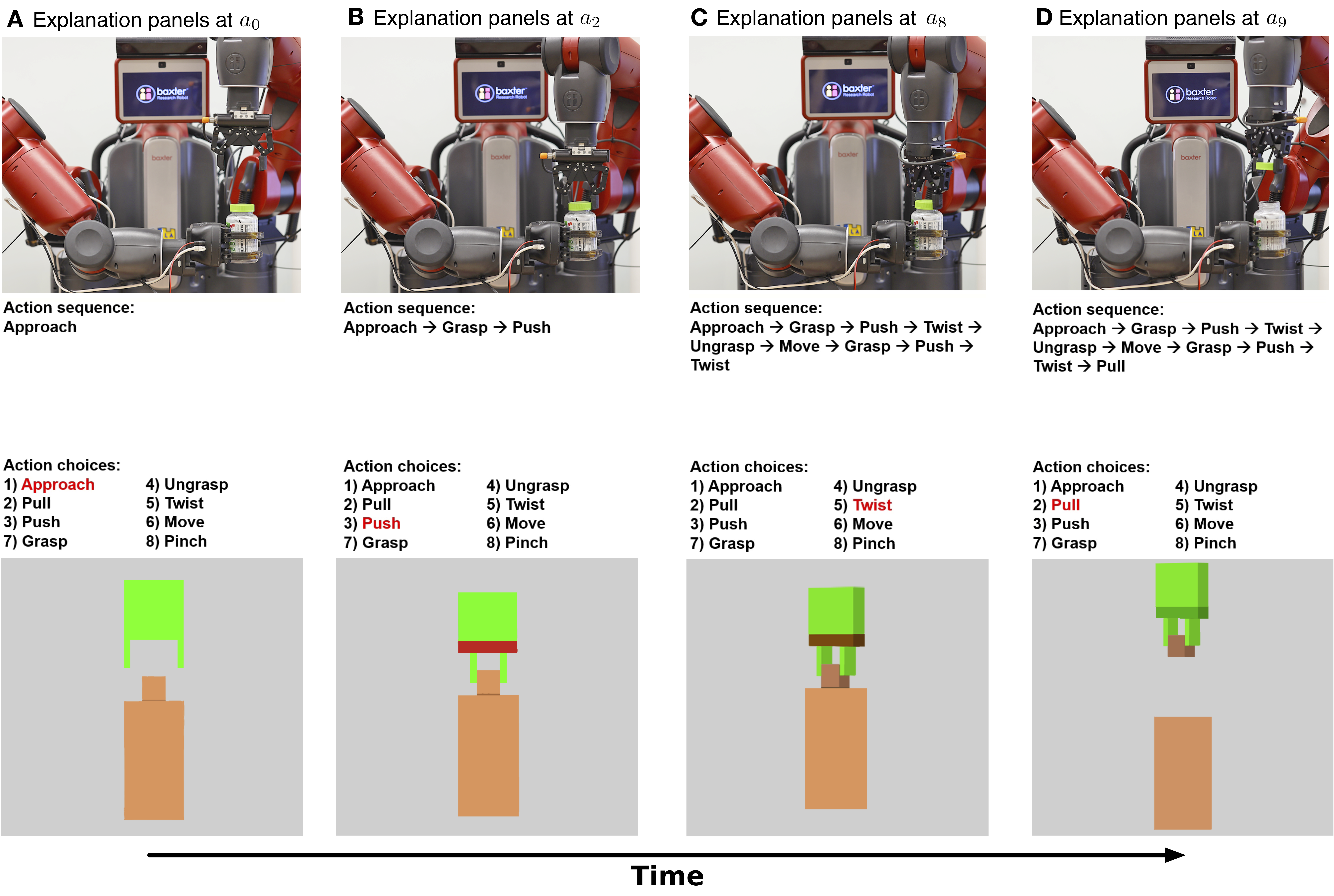

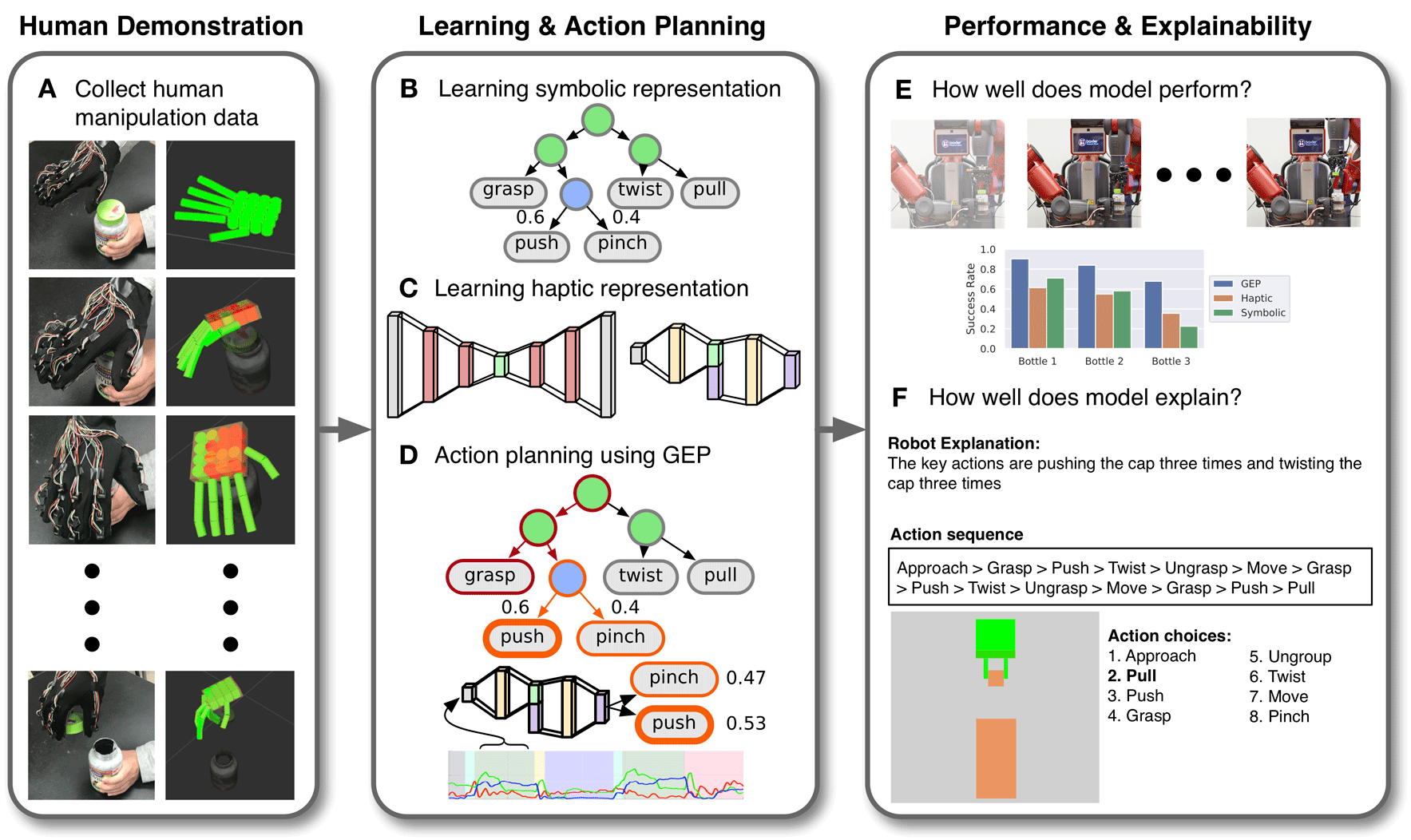

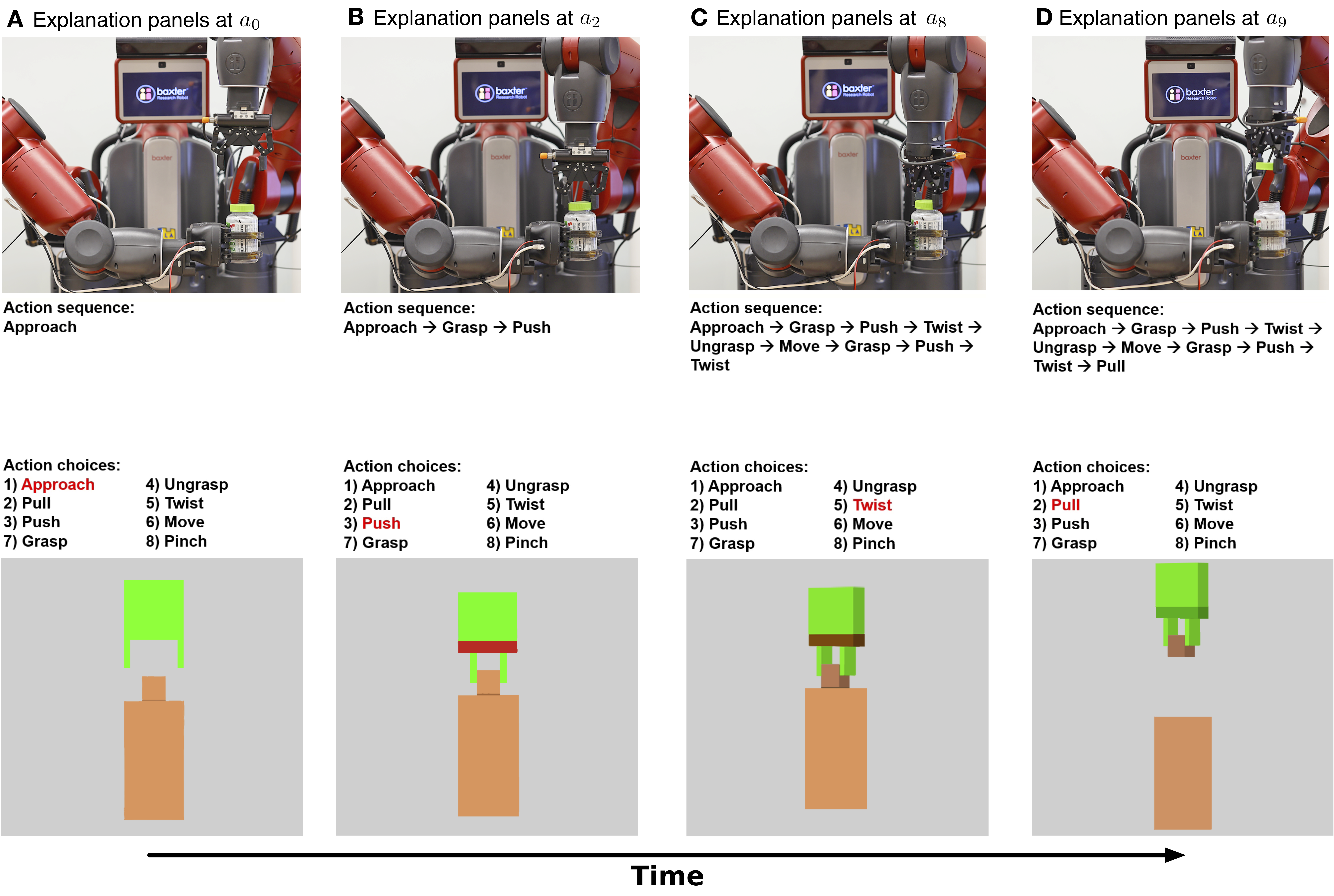

The ability to provide comprehensive explanations of chosen actions is a hallmark of intelligence. Lack of this ability impedes the general acceptance of AI and robot systems in critical tasks. This paper examines what forms of explanations best foster human trust in machines and proposes a framework in which explanations are generated from both functional and mechanistic perspectives. The robot system learns from human demonstrations to open medicine bottles using (i) an embodied haptic prediction model to extract knowledge from sensory feedback, (ii) a stochastic grammar model induced to capture the compositional structure of a multistep task, and (iii) an improved Earley parsing algorithm to jointly leverage both the haptic and grammar models. The robot system not only shows the ability to learn from human demonstrators but also succeeds in opening new, unseen bottles. Using different forms of explanations generated by the robot system, we conducted a psychological experiment to examine what forms of explanations best foster human trust in the robot. We found that comprehensive and real-time visualizations of the robot’s internal decisions were more effective in promoting human trust than explanations based on summary text descriptions. In addition, forms of explanation that are best suited to foster trust do not necessarily correspond to the model components contributing to the best task performance. This divergence shows a need for the robotics community to integrate model components to enhance both task execution and human trust in machines.
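The abstract describes an Earley parser that lets the grammar model and the haptic model jointly decide the robot's next action. As a rough illustration only, the Python sketch below runs a plain (non-probabilistic) Earley recognizer over a hypothetical toy bottle-opening grammar to find which actions may legally come next. It then picks the allowed action with the highest score from a stand-in haptic model. The grammar, the action names, `next_terminals`, `choose_action`, and the haptic scores are all assumptions made for this example. This is not the paper's improved Earley parser, which works with a stochastic grammar rather than the hard grammatical mask used here.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical toy grammar (NOT the grammar induced in the paper).
# Keys are nonterminals; any symbol that is not a key is a terminal (an action).
GRAMMAR: Dict[str, List[List[str]]] = {
    "S": [["grasp", "Open", "pull"]],
    "Open": [["twist"], ["push", "twist"], ["squeeze", "twist"]],
}

# Earley item: (lhs, rhs, dot position, origin chart index)
Item = Tuple[str, Tuple[str, ...], int, int]


def next_terminals(grammar: Dict[str, List[List[str]]], start: str,
                   prefix: List[str]) -> Set[str]:
    """Return the actions that may follow `prefix` under `grammar`.

    Assumes the grammar has no empty (nullable) rules, so a completed item
    always started at an earlier chart index.
    """
    chart: List[Set[Item]] = [set() for _ in range(len(prefix) + 1)]
    for rhs in grammar[start]:
        chart[0].add((start, tuple(rhs), 0, 0))

    for i in range(len(prefix) + 1):
        agenda = list(chart[i])
        while agenda:
            lhs, rhs, dot, origin = agenda.pop()
            if dot < len(rhs):
                sym = rhs[dot]
                if sym in grammar:
                    # Predict: expand the nonterminal after the dot.
                    for r in grammar[sym]:
                        item = (sym, tuple(r), 0, i)
                        if item not in chart[i]:
                            chart[i].add(item)
                            agenda.append(item)
                elif i < len(prefix) and prefix[i] == sym:
                    # Scan: the observed action matches the expected terminal.
                    chart[i + 1].add((lhs, rhs, dot + 1, origin))
            else:
                # Complete: advance every item that was waiting for `lhs`.
                for plhs, prhs, pdot, porigin in list(chart[origin]):
                    if pdot < len(prhs) and prhs[pdot] == lhs:
                        item = (plhs, prhs, pdot + 1, porigin)
                        if item not in chart[i]:
                            chart[i].add(item)
                            agenda.append(item)

    # Terminals right after the dot in the final chart set may come next.
    return {rhs[dot] for _, rhs, dot, _ in chart[len(prefix)]
            if dot < len(rhs) and rhs[dot] not in grammar}


def choose_action(prefix: List[str], haptic_scores: Dict[str, float],
                  grammar: Dict[str, List[List[str]]] = GRAMMAR,
                  start: str = "S") -> Optional[str]:
    """Pick the grammatically allowed action with the best (hypothetical) haptic score."""
    allowed = next_terminals(grammar, start, prefix)
    scores = {a: haptic_scores.get(a, 0.0) for a in allowed}
    if not scores or max(scores.values()) <= 0.0:
        return None  # no allowed action is supported by the haptic model
    return max(scores, key=scores.get)
```

For example, `choose_action(["grasp"], {"pull": 0.5, "push": 0.3, "twist": 0.2})` returns `"push"`. The stand-in haptic model favors `pull`, but the grammar rules it out until the cap has been opened. This captures the intuition of letting the task structure constrain moment-to-moment sensory predictions.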

@article{edmonds2019tale,

title={A tale of two explanations: Enhancing human trust by explaining robot behavior},

author={Edmonds, Mark and Gao, Feng and Liu, Hangxin and Xie, Xu and Qi, Siyuan and Rothrock, Brandon and Zhu, Yixin and Wu, Ying Nian and Lu, Hongjing and Zhu, Song-Chun},

journal={Science Robotics},

volume={4},

number={37},

pages={eaay4663},

year={2019},

publisher={AAAS}

}